Research & Innovation Roadmap

This Research & Innovation Roadmap, developed under the NexusForum.EU project and supported by Horizon Europe, outlines a research and innovation trajectory towards achieving a federated European multi-provider, AI-driven computing continuum, to enable and support data-driven innovation and AI deployments in Europe. It complements the technology developments of the European Alliance for Industrial Data, Edge and Cloud and the new IPCEI-CIS, providing a long-term vision bridging industry needs with excellent research in cloud, edge, and AI technologies.

A European Perspective: Markets, Laws, and Initiatives

The report “The future of European competitiveness” assesses the current state of European competitiveness, with a particular focus on industrial policy, its caveats, and hopes for its future. Geopolitical instability has heightened the urge not to depend on other countries, because the EU has realised that dependency easily engenders instability. There has been a paradigm shift: “The era of rapid world trade growth looks to have passed, with EU companies facing both greater competition from abroad and lower access to overseas markets”. For digitalisation and decarbonisation to take place in the European economy, Europe must change radically, and investments need to rise to 1960s-70s levels.

For growth to take place, three key areas have been identified to close the innovation gap with the US and China: i) activating a joint plan aimed at strengthening competitiveness; ii) decarbonising the economy; and iii) taking action to enhance security while reducing dependencies on third parties. In particular, three main barriers prevent Europe from growing.

The European Computing Continuum is one of the essential elements for the digital and green transition to take place within the EU and beyond. The work of NexusForum serves as a facilitation instrument: on the one hand, it assesses the current landscape at the technological, economic, and legal levels; on the other, by engaging internally with academic and technical experts and externally with industry experts, policy makers, and others, it advises the European Commission on how.

European market context

Mario Draghi’s report “The future of European competitiveness” cites the lack of innovation as one of the reasons why the EU’s industry lacks dynamism. More specifically, it argues that the problem lies in the innovation lifecycle, from the initial product idea to its commercialisation. First, companies encounter financial barriers due to the quantity and quality of funding dedicated to high-risk, breakthrough Research and Innovation (R&I) areas. The EU’s capital market is smaller than that of the US, and its venture capital sector is far less developed. Of the global share of venture capital funds, the EU raises only around 5%, while the US accounts for more than half (52%), followed by China with a 40% share. Companies looking to scale up in Europe therefore often seek growth opportunities abroad, in markets that offer greater reach and higher returns; in turn, demand for venture capital finance in Europe shrinks, feeding a vicious circle. Indeed, Europe lags behind in the innovative technologies that have the power to drive productivity growth. For cloud, the numbers are loud and clear: three US tech giants (“hyperscalers”) account for more than 65% of the global cloud market.

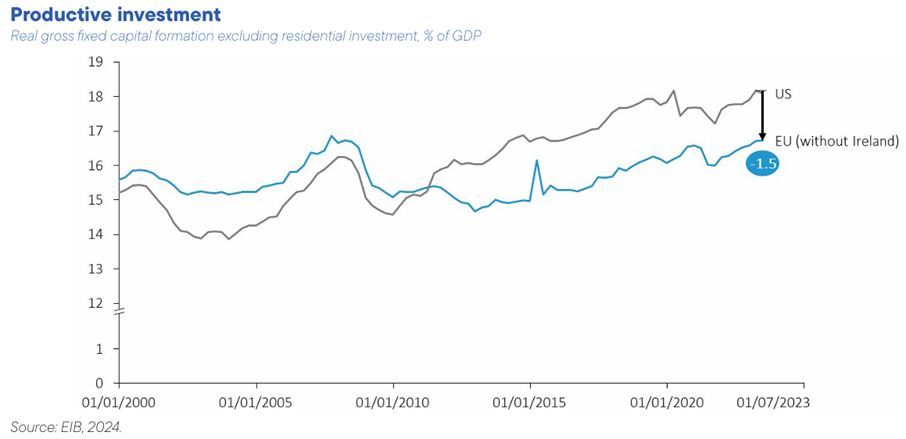

The share of GDP spent on Research and Innovation in the US was twice that of the EU. This innovation gap is directly linked to the gap in productive investment between the European and US economies, shown in the accompanying figure.

European legal context

In the European legal context, the digital ecosystem is shaped by several key regulations and initiatives aimed at ensuring a fair, competitive, and secure digital environment. Over the last decade, Europe has been developing a regulatory framework to establish the future European Digital Single Market. Its primary objectives are to ensure fair competition and foster innovation, create a safer digital space, give individuals greater control over their data, and promote digital sovereignty, inclusion, equality, and sustainability.

Within this context, the European Computing Continuum and its associated activities play a crucial role in establishing relevant requirements and guiding the needs of the regulatory landscape. Legislation shapes the European Computing Continuum by:

- Ensuring robust data protection measures across all stages of data processing.

- Encouraging the development and deployment of AI systems that are transparent, fair, and accountable.

- Creating a more open and competitive data economy, enabling innovation and collaboration.

These laws collectively aim to create a secure, fair, and innovative digital environment in Europe, balancing the need for data protection with the benefits of data-driven innovation.

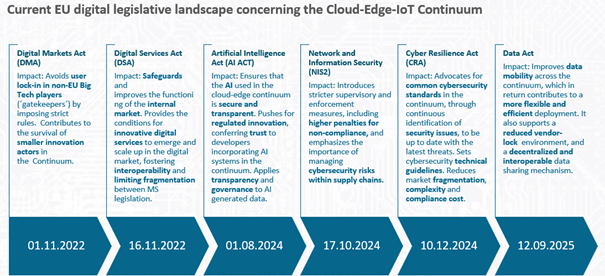

The current regulatory landscape in the European Cloud-Edge Continuum is summarised in the accompanying figure, which shows the timeline and impact of key regulations. These regulations can be roughly grouped into those aiming to i) create a digital single market in Europe, ii) regulate how data and AI are used in Europe, and iii) safeguard the European digital environment.

European initiatives related to cloud and edge

To address the technical challenges, the EU has launched several initiatives to develop foundational cloud technologies in Europe, support the development of AI in Europe, and incentivise data sharing between organizations in Europe.

Towards a European Cognitive Computing Continuum

The progressive convergence between Cloud Computing and the Internet of Things (IoT) is resulting in a Computing Continuum. The multi-faceted concept of Edge Computing first came to represent a middle ground between data centres and IoT hyper-local networks of sensors and actuators (H-CLOUD project, 2020).

The Compute Continuum can be viewed along several dimensions. The first is technical: AI for Cloud-Edge, Cloud-Edge for AI, and Telco Cloud-Edge. The second views the Compute Continuum through the lens of open-source code, digital sovereignty, interoperability, and cybersecurity, as well as energy consumption. The third covers markets, rules, policies, and regulation. These aspects are particular to Europe, with its stricter rules on the use of personal information, AI, and data. The fourth is the missing components. This deliverable, the roadmap, outlines some of the components Europe still lacks to produce a competitive compute infrastructure.

Challenges and opportunities:

- Fragmented datacentre and cloud market (missing segments)

- EuroHPC JU and AI Factories

- Establishment of a European ecosystem based on RISC-V open standard

- Convergence of networking and compute and O-RAN

- Convergence of Operational Technologies and Information Technologies

- Data privacy and access to European data

Read more on this in the full Research & Innovation roadmap

Roadmap main topics

The section below provides an overview of the main topics and subtopics that are part of the Research & Innovation Roadmap.

Transversal topics

Digital sovereignty

Open source

Sustainability

Cybersecurity

Interoperability

AI for Cloud-Edge: Orchestration and Managing a Multi-Provider Continuum

Cloud-Edge for AI: Enabling and Facilitating AI Applications Across the Continuum

Telco Cloud-Edge: Telco as One of the Main Tenants and Infrastructure Providers

Digitalisation of Industry Sectors: Requirements from Next-Gen Applications

Automotive and the continuum

Vehicles are becoming ‘computers on wheels’, with technology assisting the driver. Currently, this assistance is often safety-related. Fully automated technology is finding its way down the technology chain: cruise control, lane departure warnings, and automatic crash signalling (OnStar in the US, Apple devices), to name a few.

Technology extends beyond the driver: computer-based maintenance, GPS-enabled anti-theft systems, and electronic Vehicle Identification Numbers. An (e)SIM is commonplace in trucks, transmitting maintenance and wear information to OEMs on behalf of hauliers that demand high uptime through on-demand servicing.

The industry is also changing how software is developed, moving towards Software Defined Vehicle (SDV) architectures in which features are updated continuously and delivered over the air. Connectivity using cloud services offers new possibilities. Motivated by Tesla and Jenkins-style development, the SDV in the automotive sector spans the continuum from the cloud to the edge and devices. Smart cities, with roadside units for safety, road monitoring, and autonomous driving, exemplify a rich environment for the continuum.

With Roadside Units (RSUs) acting as intermediary storage and compute units, the automotive sector illustrates the continuum well. Vehicles store and upload data via RSUs. An example of a working system is automated toll booths, where the journey is transmitted and paid for via IEEE 802.11p, Wi-Fi with priority bands. Further developments will include cameras that flag vulnerable road users (VRUs), unforeseen icy roads, or accident hot spots. RSUs demonstrate the use of edge compute as opposed to solely edge devices. Note that data sovereignty and privacy are key issues.

Augmented Reality/Virtual Reality technologies

Challenges: Extended reality (XR) technologies, such as augmented reality (AR) and virtual reality (VR), are expected to transform everyday life fundamentally, and enable new use cases and applications in industry. Due to the high data throughput and low latencies required to deliver a seamless VR/AR/XR experience, these technologies are among the main use cases for 5G and edge computing technologies.

R&D priorities:

- Consistency in Virtual Environments: Develop algorithms to ensure seamless and consistent user experiences across decentralized networks.

- Latency Reduction: Deploy and reinforce telecom and network infrastructure to minimize latency for real-time interaction within VR environments.

- AI Integration: Integrate AI to dynamically adapt and optimize VR experiences based on user interaction and environmental changes.

- Interoperability Standards: Establish standards to ensure interoperability among diverse VR platforms and decentralized computing resources.

- Secure Data Exchange: Create secure protocols for spatial data exchange in decentralized VR applications to protect user privacy and data integrity.

- Operational Datasets: Develop open operational datasets for training and evaluating AI models within VR scenarios.

Potential impact: VR/AR/XR are enabling new use cases and applications, with the potential of bringing great value to industry and enterprises.

Cyberspace and physical space fusion

Challenges: Originating from Japan, the concept of “Society 5.0” envisions a connected cyberspace where AI surpasses human capabilities, feeding optimal outcomes back into the physical realm. Ensuring data privacy and security on the Cloud is paramount as AI systems process vast amounts of data. High latency can hinder performance, particularly in critical applications such as healthcare or autonomous vehicles, where real-time processing is essential. Additionally, AI systems must efficiently scale with increasing data volumes and user demand to maintain optimal performance. The computational power required for AI can be costly, especially with the utilization of large neural networks, thus affecting overall expenses. Interoperability is crucial as AI systems often need to integrate with various other technologies and data sources, necessitating seamless compatibility. Moreover, regulatory compliance, particularly regarding data protection regulations like GDPR in Europe, is vital for AI systems operating on the Cloud, emphasizing the importance of cross-border data flows and specialised legal expertise. Lastly, addressing ethical considerations surrounding AI’s integration into society, alongside ensuring data quality and standardization, remains imperative to harness its full potential while mitigating risks and limitations.

R&D priorities:

- Data Privacy and Security: There are risks associated with data breaches and unauthorized access, which necessitate robust encryption and security protocols.

- Latency and Performance: Cloud infrastructure must be optimized to minimize latency and provide the necessary computational power.

- Scalability: Cloud platforms need to provide flexible and scalable resources to accommodate the growth of AI applications without compromising performance.

- Cost: Organizations must manage the cost of Cloud resources effectively to make AI integration economically viable.

- Interoperability: Ensuring interoperability between different Cloud services and AI models requires standardized protocols and interfaces.

- Regulatory Compliance: Develop AI governance platforms that automate the identification of regulatory changes and their translation into enforceable policies, and that support risk management and lifecycle governance.

- Technical Expertise: Training and recruiting talent are necessary to bridge the skills gap and drive integration forward.

- Ethical Considerations: Ensure that AI systems are transparent, explainable, and aligned with human values.

- Data Quality and Standardization: Ensure that shared data is of high quality and standardized for interoperability.

Potential impact: The convergence of cyberspace and physical space envisages a seamless integration, facilitating smart cities and environments where data exchange between sensors and cyberspace optimizes resources and enhances quality of life. Society 5.0 embraces AI-driven analysis, wherein AI not only processes data but also offers feedback and solutions, augmenting decision-making. This feedback loop, empowered by AI, is anticipated to spawn new value across sectors, fostering economic growth, job creation, and societal well-being.

Disruptive impact initiatives

This page contains excerpts of the Research & Innovation Roadmap. Full references and in-depth analyses can be found in the full document.